Meanwhile, the minority neural net tendency used statistical techniques and differential equations.

It's hard to imagine two more discordant cultures.

In these days of the overarching victory of the latter, I was interested to read the following from the excellent overview book, "Statistics: A Very Short Introduction" (David J. Hand), page 104.

"In fact, logistic regression can be regarded as the most basic kind of neural network."I confess I had never thought of neural networks as simply a mainstream statistical classification tool.

Wikipedia has two articles on the subject, "Discriminant function analysis" and "Linear discriminant analysis", along with one on "Logistic regression".
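Hand's remark is easy to make concrete. What follows is only a minimal sketch under my own assumptions, not anything taken from his book: a single "neuron" with a sigmoid activation, trained by gradient descent on the cross-entropy loss, which is logistic regression fitted by gradient descent. The toy data (logical OR), the learning rate, the epoch count and all the function names are made up for illustration.

```python
import math


def sigmoid(z):
    """Logistic function: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """One 'neuron': weighted sum of the inputs passed through a sigmoid.

    This is exactly the logistic regression model P(y = 1 | x).
    """
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return sigmoid(z)


def train(data, epochs=2000, lr=0.5):
    """Fit the weights by stochastic gradient descent on cross-entropy loss."""
    n_inputs = len(data[0][0])
    weights, bias = [0.0] * n_inputs, 0.0
    for _ in range(epochs):
        for x, y in data:
            # For a sigmoid output with cross-entropy loss, the gradient
            # with respect to the weighted sum z is simply (p - y).
            err = predict(weights, bias, x) - y
            weights = [w - lr * err * xi for w, xi in zip(weights, x)]
            bias -= lr * err
    return weights, bias


# Hypothetical toy data: the logical OR of two binary inputs.
DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

weights, bias = train(DATA)
```

Calling `predict(weights, bias, (0, 1))` then returns the fitted probability that the label is 1. Stack several such units in layers and you have the usual multi-layer network; keep just the one and you have the statistician's classifier.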

Something to look at further.

---

Marr's Tri-Level Hypothesis

David Marr was one of my heroes when I was an active AI researcher. Outside of computer vision I think he is mostly forgotten now (he died tragically early), but he said something important about methodology in AI research when many around him were writing programs that did vaguely cool stuff while claiming they were advancing science.

Marr distinguished three levels of analysis.

- computational level: what does the system do (e.g. what problems does it solve or overcome) and, similarly, why does it do these things

- algorithmic/representational level: how does the system do what it does, specifically, what representations does it use and what processes does it employ to build and manipulate the representations

- implementational/physical level: how is the system physically realised (in the case of biological vision, what neural structures and neuronal activities implement the visual system).
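A toy illustration of the first two levels, offered as my own sketch rather than anything Marr wrote: the computational level is a specification of what counts as a correct answer, and the algorithmic level is any one of several procedures that satisfy it. Sorting is the assumed example here, and `meets_spec`, `insertion_sort` and `merge_sort` are names invented for the purpose. The implementational level would be whatever interpreter and hardware happens to run the code.

```python
from collections import Counter


def meets_spec(inp, out):
    """Computational level: WHAT is computed, with no mention of how.

    The output must contain the same items as the input, in
    non-decreasing order.
    """
    same_items = Counter(inp) == Counter(out)
    ordered = all(a <= b for a, b in zip(out, out[1:]))
    return same_items and ordered


def insertion_sort(items):
    """Algorithmic level, option 1: grow a sorted list one item at a time."""
    result = []
    for item in items:
        i = len(result)
        while i > 0 and result[i - 1] > item:
            i -= 1
        result.insert(i, item)
    return result


def merge_sort(items):
    """Algorithmic level, option 2: split, sort the halves, merge them."""
    if len(items) <= 1:
        return list(items)
    mid = len(items) // 2
    left, right = merge_sort(items[:mid]), merge_sort(items[mid:])
    merged, i, j = [], 0, 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    return merged + left[i:] + right[j:]


SAMPLE = [5, 2, 9, 2, 7, 1]
```

Both `meets_spec(SAMPLE, insertion_sort(SAMPLE))` and `meets_spec(SAMPLE, merge_sort(SAMPLE))` hold, although the two procedures use quite different representations and processes. Arguing about which algorithm is better says nothing about whether the specification was the right one to begin with.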

His terms are not great (he was trained as a biologist): his computational level is really the theory of system behaviour in the environment of interest; his second level might be better described as an architectural level, describing the various ways the system's capabilities could be decomposed into subsystems and their inter-relationships; finally comes the issue of specific processing mechanisms and algorithms.

It's still common to see people waving the banner for one of these elements of analysis, while ignoring the others. Only confusion results.

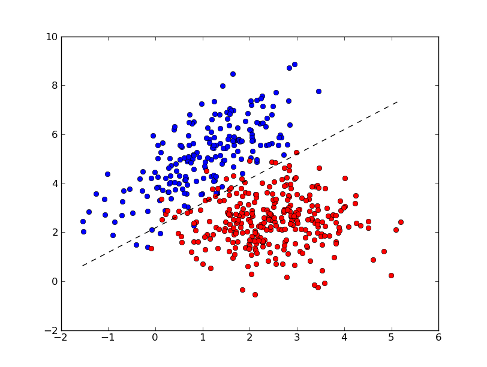

Neural networks are an architecture. As currently understood and built, the term denotes a distributed, connected computational architecture well-suited to a certain class of problems, namely pattern recognition, feature extraction and classification.

This is a proper subset of the cognitive problems animals (including humans) have to solve in the world.

Artificial neural networks today consume terabytes of training data, solving recognition/classification problems of interest to Google, Facebook and the like.

I am reminded of the man who has a hammer.

The easy wins will fade away well before they achieve the purported Holy Grail of Artificial General Intelligence.

I hasten to add the obvious: in any event, you and I are considerably more than arid and cerebral AGIs.
